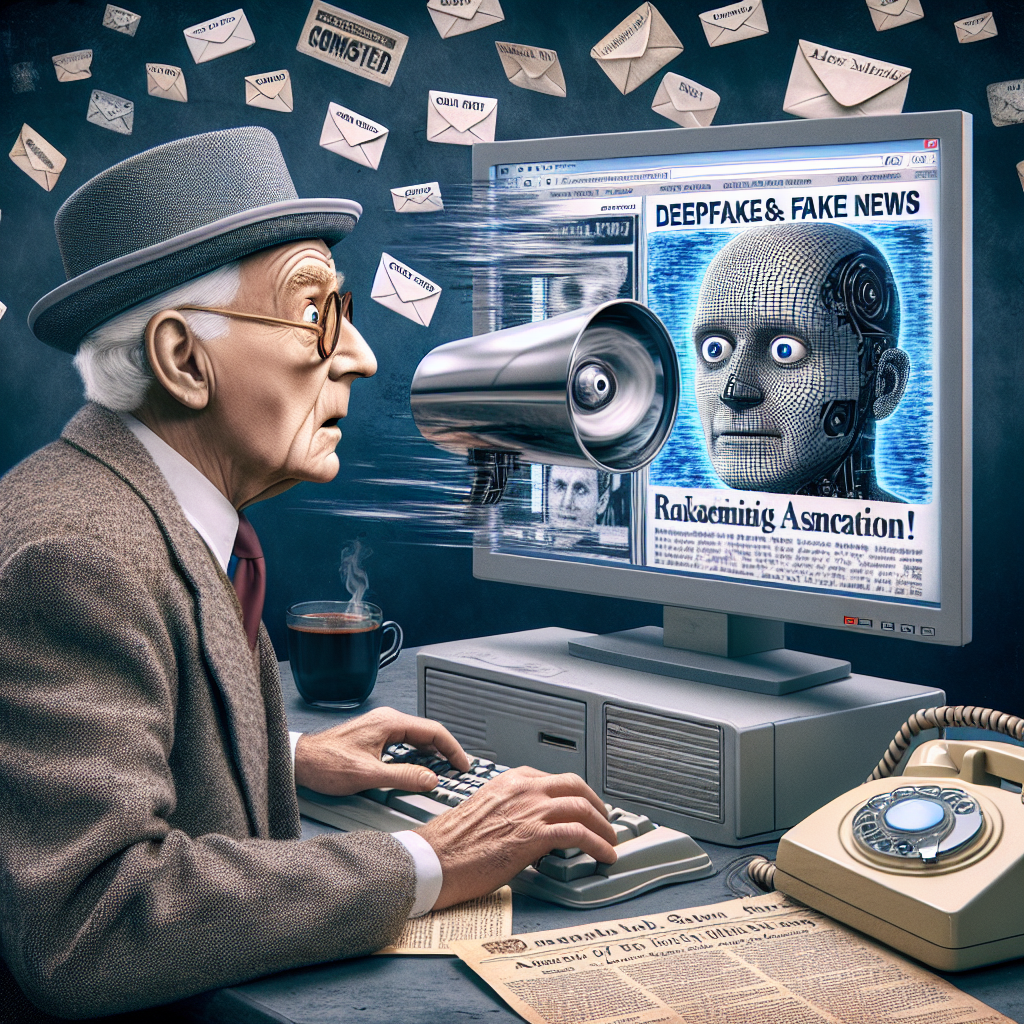

Deepfakes and Fake News: The New Cyber Threats You Can’t See Coming

Now, I’m no stranger to the wonders and worries that come with new technology. Grew up in an era of rotary phones and handwritten letters, so this whole digital mess is still a bit of a head-scratcher. But one thing’s clear as day: deepfakes and fake news have quietly crept into our lives, and they’re more dangerous than most folks give them credit for. It’s not just about gullible teenagers or knee-jerk social media rants; this is a full-blown challenge to how we understand truth in our everyday lives. And frankly, it’s enough to make a person wish for the simplicity of a newspaper and an honest-to-goodness editor.

Let’s start with the basics. For those as technologically challenged as myself, a “deepfake” is a synthetic video, image, or audio clip in which one person’s face or voice has been digitally grafted onto someone else’s. In other words, it can make you believe someone said or did something they never actually did. Pair that with the avalanche of fake news (stories engineered not for accuracy but for outrage, clicks, or deliberate deception) and you’ve got yourself a perfect storm of confusion and mistrust.

What’s truly unsettling is how seamless these deepfakes have become. Just a few years back, doctored videos were easy to spot: shaky edits, obvious mismatches, bad lighting. But now? They’re slick, polished, almost indistinguishable from the real thing. And when they’re backed by fabricated news headlines, the average person, especially those of us who didn’t grow up swiping and scrolling, can hardly separate fact from fiction.

To be honest, it reminds me of my early days on the internet, dipping my toes in without a life jacket. It was already a wild place full of pop-up ads and half-truths, where you had to be careful about what you clicked on lest you end up with a computer virus or worse. But now, the stakes are higher. Misinformation doesn’t just lead to a slow-loading webpage; it can sway elections, ruin reputations, and even incite violence. The invisible hands behind these deepfakes and fake news stories aren’t just messing with pixels; they’re meddling with minds.

What’s maddening is how easily these technologies can be weaponized. It no longer takes a hacker or a government spy to spread falsehoods. A savvy mischief-maker, or worse, a malicious actor, can craft a believable video of a political figure making outrageous claims or admitting to crimes they never committed. Spread that on social media, fuel the outrage, and watch society fracture a little more with every share and retweet.

And let’s not forget how this affects everyday people. Say you’re a local business owner or a private citizen. A deepfake video showing you doing something scandalous or saying something inflammatory could go viral overnight, causing damage to your livelihood or personal relationships. Without the right tools and resources, clearing your name becomes a daunting task.

For all this gloom, though, there’s a silver lining—a chance for society to get smarter, not just technologically savvy but also discerning and critical. This means more than just relying on fancy computer programs to detect deepfakes or algorithms to flag fake news stories. It’s about cultivating a healthy skepticism and a habit of verifying before accepting, sharing, or reacting.

Now, I know what you’re thinking. Easier said than done, especially when the news cycle never sleeps and outrage is just a click away. It’s tempting, and only human, to respond emotionally rather than logically. However, if we allow ourselves to be manipulated by every fabricated headline or doctored video, we hand over the keys to our collective sanity. We become pawns in a game played by those with no regard for truth or community well-being.

Education, therefore, becomes our best defense. From school curricula to public awareness campaigns, teaching media literacy is crucial. People have to learn how to spot red flags, cross-check sources, and understand the intentions behind a message. Unfortunately, not everyone has access to this kind of education, particularly older folks like me who didn’t grow up with these digital tools. But it’s never too late to learn, even if it sometimes feels like texting from a rotary phone.

On the flip side, tech companies bear a significant responsibility here. Their platforms act as megaphones for much of the content we consume. Algorithms tuned to maximize engagement shouldn’t do so at the cost of drowning the truth in a sea of sensational lies. There’s a fine balance between protecting free speech and preventing the spread of misinformation, but ignoring the problem is no longer an option.

Lastly, there’s a broader societal question we have to face: how do we rebuild trust in an age where any video or news story could be fake? This is where community-level conversations, transparency from officials and media, and an emphasis on accountability play vital roles. We need to value truth not as a convenience but as a cornerstone of democracy and coexistence.

At the end of the day, this isn’t just a tech problem or a news cycle quirk. It’s a cultural crossroads. We can either continue down a path where reality blurs and chaos reigns, or we can choose to be informed, cautious, and committed to truth. As someone who’s mostly just trying to keep up, I’d vote for the latter—even if it means I have to ask a grandkid to explain what a deepfake is every now and then.

So, to wrap this up without sounding like an old fogey yelling at clouds: deepfakes and fake news are serious threats, not just to politics and media but to everyday life and personal integrity. Recognizing the problem is the first step, and tackling it requires a good dose of old-fashioned skepticism paired with modern vigilance. It might take some effort, but if we don’t act now, the truth might just become the hardest thing to find on the internet.